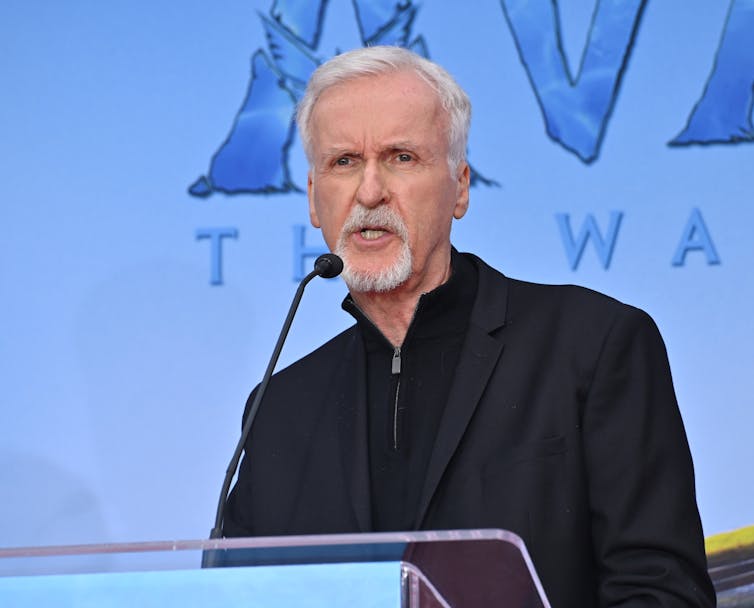

While many people in the creative industries are worrying that AI is about to steal their jobs, Oscar-winning film director James Cameron is embracing the technology. Cameron is famous for making the Avatar and Terminator movies, as well as Titanic. Now he has joined the board of Stability.AI, a leading player in the world of Generative AI.

In Cameron’s Terminator films, Skynet is an artificial general intelligence that has become self-aware and is determined to destroy the humans who are trying to deactivate it. Forty years after the first of those movies, its director appears to be changing sides and allying himself with AI. So what’s behind this?

Valued at around a billion dollars, Stability.AI was, until recently at least, headquartered above a chicken shop in Notting Hill. It is famous for Stable Diffusion, a text-to-image tool that creates hyperreal pictures from text requests (or prompts) by its users. Now it is moving into AI-created video.

Cameron appears to see their work as a potential game changer in film visual effects: “I was at the forefront of CGI over three decades ago, and I’ve stayed on the cutting edge since. Now, the intersection of generative AI and CGI image creation is the next wave,” he commented in a media release from Stability.AI.

Filmmakers supplement the live action reality that they shoot with two kinds of effects: special effects (SFX) and visual effects (VFX). They come at two different stages of film production. During the shoot, SFX are all the physical effects used to create spectacle – explosions, blood squibs, vehicle crashes, prosthetics, mechanical movement of sets.

During postproduction, VFX are the digital systems that add new elements to live-action filmed images – computer-generated imagery (CGI), compositing, motion capture rendering. They also combine separately shot images together.


A recent development in film technology, Virtual Production, has brought some VFX techniques into the film shoot. This process uses what are known as “game engines”, a technology developed for the creation of video games. Actors are filmed in front of sophisticated LED walls, which display dynamic, pre-produced virtual worlds around the performer.

The real-world physicality of SFX means that artificial intelligence will have very limited impact here. It is in VFX that AI may have a transformative effect. I’ll be talking about the subject of deepfakes and AI in film at a public lecture on October 30, 2024: ‘Deepfakes and AI in film and media: seeing is not believing’.

We are also investigating the subject through the Synthetic Media Research Network, a group that I co-lead which brings together film creatives, academic researchers and AI developers. I spoke to a member of this collective, Christian Darkin, a VFX artist who now works as Head of Creative AI for Deep Fusion Films.

He sees the impact of generative AI on VFX as creating infinite choice in post-production. In future, filming the actors will be just the beginning. “You’ll put in the background later, you’ll change the camera angles, you’ll change the expressions, you’ll ramp up the emotion in the acting, you’ll change the voices, the costumes, the people’s faces, everything,” Christian told me.

One key motive for the film industry’s incorporation of AI into VFX is simple: the expense of traditional VFX. If you have watched the end credits of a blockbuster movie, you’ll have seen the number of VFX technicians that they employ. Generative AI offers a cheaper way to achieve spectacular screen images, potentially with no loss of quality.

The implication is that a lot of VFX technicians will lose their jobs as a result. However, in conversations that I have had with people working in these roles, there’s a sense that, being highly skilled and technologically savvy, they will probably move into new roles in emerging areas of tech.

The ethics of AI technology

Media creatives are now presented with a huge selection of generative AI tools that offer new ways of creating images, text, voices and music. However, a key problem related to the technology still needs to be addressed: have these AI tools been created ethically?

Each generative AI tool, from ChatGPT to Midjourney to Runway, rests on a foundation model that has been exposed to vast amounts of data, often from the internet, in order to help it improve at what it does. This process is called “training”.

AI developers build huge reservoirs of training data by using “crawlers”, bots that scour the internet for useful material and download trillions of files for their own use. This can include books, music, images, the spoken word and videos, created by artists who retain copyright over their material.

Stability.ai has been involved in a legal action over copyright in the UK courts. Getty Images, holder of a huge collection of pictures and photographs, is currently suing the company.

A former executive at Stability.ai, Ed Newton-Rex, resigned in November 2023 over the company’s practice of scraping creative content to train its models without payment, a practice it claims is “fair use”.

Perhaps Cameron thinks that the AI developers will win the court cases against them and continue their technological trajectory. I asked Stability.ai whether, before Cameron joined the company, they had scraped any of his creative material from the internet to use as training data for their foundation models, and whether they had asked his permission.

Their response was: “We’re not able to comment on the source of Stability AI’s training data.”

Cameron’s Terminator films warned about the potential catastrophic effects of rogue AI. Yet the director clearly thinks that he is now backing a winning horse.